SemEval-2015 Task 5: QA TempEval

RESULTS RECALCULATED TO MATCH THE COMMON DEFINITION OF 'RECALL' MEASURE (2015-03-09).

Detailed task description: PDF (Mar 9, 2015)

INTRODUCTION

QA TempEval is a follow-up to the TempEval series in SemEval. It introduces a major shift in evaluation methodology, from temporal information extraction to temporal question answering (QA). QA is a natural way to evaluate how well temporal information has been understood. The task for participating systems remains the same: extracting temporal information from plain text documents. However, instead of being compared with a human-annotated key, the system output is used to build a knowledge base that answers temporal questions about the documents, and those answers are compared with a human answer key. The QA score therefore measures how well an approach captures the temporal information relevant to an end-user task, in contrast to corpus-based evaluation, where all information is equally important for scoring.

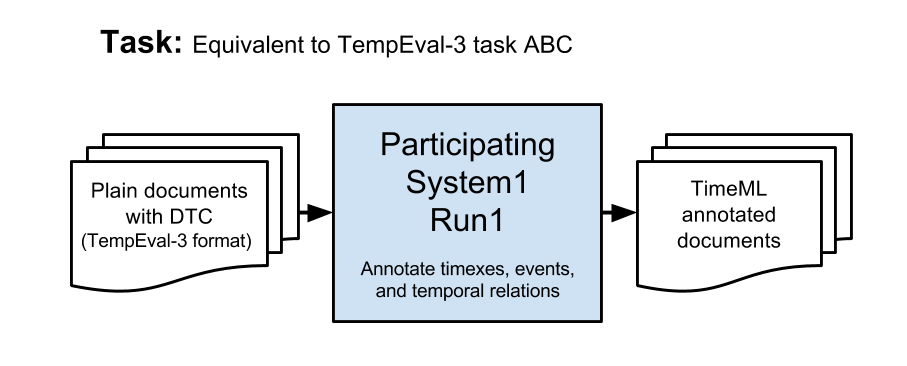

TASK DESCRIPTION

The task is equivalent to TempEval-3 task ABC. Participants receive a set of plain text documents, and their systems must annotate them following the TimeML scheme, which defines two main types of elements.

Temporal entities: These include events (EVENT tag) and temporal expressions (timexes, TIMEX3 tag), together with their attributes, such as the event class, the timex type, and the normalized timex value.
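To make the two entity types concrete, here is a minimal sketch that reads EVENT and TIMEX3 elements and their main attributes from a TimeML string using Python's standard `xml.etree.ElementTree`. The fragment, its ids, and its attribute values are hypothetical and simplified; real TempEval-3 files carry more structure (for example MAKEINSTANCE and TLINK elements), which this sketch ignores.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified TimeML fragment written for illustration only.
SAMPLE = """<TimeML>
<TEXT>John <EVENT eid="e1" class="STATE">was</EVENT> in the gym between
<TIMEX3 tid="t1" type="TIME" value="XXXX-XX-XXT18:00">6:00 p.m.</TIMEX3> and
<TIMEX3 tid="t2" type="TIME" value="XXXX-XX-XXT19:00">7:00 p.m.</TIMEX3></TEXT>
</TimeML>"""


def extract_entities(timeml: str):
    """Return (events, timexes), each a list of attribute dicts plus the tagged text."""
    root = ET.fromstring(timeml)
    events = [
        {"id": e.get("eid"), "class": e.get("class"), "text": e.text}
        for e in root.iter("EVENT")
    ]
    timexes = [
        {"id": t.get("tid"), "type": t.get("type"),
         "value": t.get("value"), "text": t.text}
        for t in root.iter("TIMEX3")
    ]
    return events, timexes


if __name__ == "__main__":
    events, timexes = extract_entities(SAMPLE)
    for entity in events + timexes:
        print(entity)
```

The event class, timex type, and normalized value are exactly the attributes a participating system has to predict alongside the entity spans.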

Temporal relations: A temporal relation (TLINK tag) links a pair of entities and specifies the temporal relation between them. TimeML relation types map to the 13 Allen interval relations as follows:

- SIMULTANEOUS and IDENTITY: equal
- BEFORE: before
- AFTER: after
- IBEFORE: meets
- IAFTER: met by
- IS_INCLUDED: during
- INCLUDES: contains
- DURING: no direct Allen counterpart
- BEGINS: starts
- BEGUN_BY: started by
- ENDS: finishes
- ENDED_BY: finished by
- (no TimeML relation): overlaps
- (no TimeML relation): overlapped by

For example, in (2), “6:00 p.m.” begins the state of being “in the gym”.

(2) John was in the gym between 6:00 p.m. and 7:00 p.m.
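The TimeML-to-Allen mapping given above can be written down as a lookup table. This is a minimal sketch: the names `TIMEML_TO_ALLEN`, `to_allen`, and `uncovered_allen_relations` are hypothetical, and DURING is mapped to equal by default, following the simplification this task adopts for it.

```python
from typing import Optional, Set

# TimeML relType -> Allen interval relation, transcribed from the mapping above.
# None marks DURING, which has no direct Allen counterpart.
TIMEML_TO_ALLEN = {
    "SIMULTANEOUS": "equal",
    "IDENTITY": "equal",
    "BEFORE": "before",
    "AFTER": "after",
    "IBEFORE": "meets",
    "IAFTER": "met by",
    "IS_INCLUDED": "during",
    "INCLUDES": "contains",
    "DURING": None,
    "BEGINS": "starts",
    "BEGUN_BY": "started by",
    "ENDS": "finishes",
    "ENDED_BY": "finished by",
}

# The 13 Allen interval relations.
ALLEN_RELATIONS = {
    "before", "after", "meets", "met by", "overlaps", "overlapped by",
    "starts", "started by", "during", "contains", "finishes", "finished by",
    "equal",
}


def to_allen(rel_type: str, during_as_equal: bool = True) -> Optional[str]:
    """Map a TimeML relType to an Allen relation name.

    With during_as_equal=True, DURING becomes 'equal'; otherwise it maps to None.
    Unknown relTypes raise KeyError.
    """
    allen = TIMEML_TO_ALLEN[rel_type.upper()]
    if allen is None and during_as_equal:
        return "equal"
    return allen


def uncovered_allen_relations() -> Set[str]:
    """Allen relations that no single TimeML relType maps to."""
    return ALLEN_RELATIONS - {r for r in TIMEML_TO_ALLEN.values() if r is not None}
```

Computing the uncovered set makes the gap explicit: only overlaps and overlapped by lack a TimeML tag.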

Note that TimeML does not explicitly include Allen’s overlaps and overlapped by relations. However, these relations can still appear in the temporal representation of a TimeML document through combinations of other relations. Also note that DURING has no clear Allen counterpart, so we map it to equal for simplicity.
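Every Allen relation is determined by how the endpoints of two intervals are ordered, which is why overlaps and overlapped by can be recovered once other relations pin those endpoints down. The sketch below classifies two intervals given as numeric (start, end) pairs. The numeric-hour encoding and the function name `allen_relation` are assumptions made for illustration.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (start, end) with start < end


def allen_relation(a: Interval, b: Interval) -> str:
    """Return the Allen relation holding from interval a to interval b.

    Each case compares endpoints only; 'overlaps' is what remains once all
    other endpoint orderings have been ruled out.
    """
    (a1, a2), (b1, b2) = a, b
    if a1 >= a2 or b1 >= b2:
        raise ValueError("intervals must satisfy start < end")
    if a2 < b1:
        return "before"
    if b2 < a1:
        return "after"
    if a2 == b1:
        return "meets"
    if b2 == a1:
        return "met by"
    if a1 == b1 and a2 == b2:
        return "equal"
    if a1 == b1:
        return "starts" if a2 < b2 else "started by"
    if a2 == b2:
        return "finishes" if a1 > b1 else "finished by"
    if b1 < a1 and a2 < b2:
        return "during"
    if a1 < b1 and b2 < a2:
        return "contains"
    # Only proper overlaps remain: start(a) < start(b) < end(a) < end(b), or the mirror case.
    return "overlaps" if a1 < b1 else "overlapped by"
```

For instance, with the gym stay of example (2) encoded as (18.0, 19.0), a hypothetical warm-up (18.0, 18.25) starts it, and a hypothetical phone call (17.5, 18.5) overlaps it, because start(call) < start(gym) < end(call) < end(gym), a configuration no single TimeML relation expresses.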

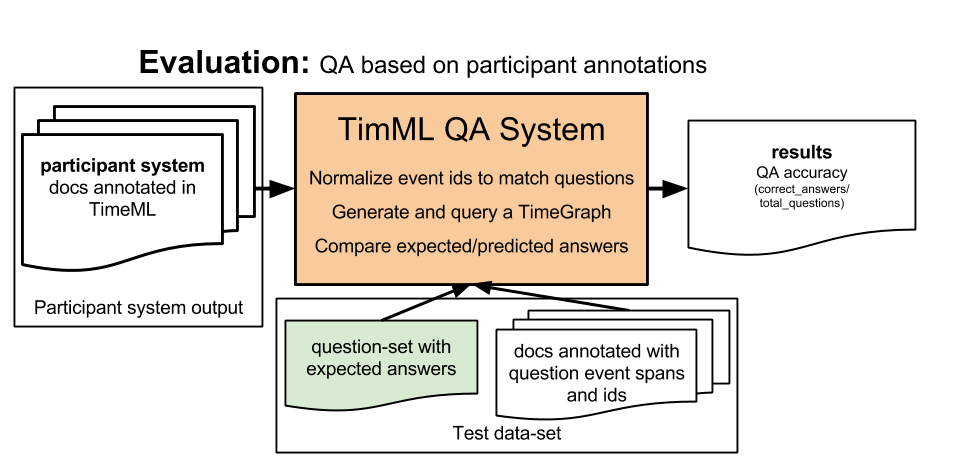

EVALUATION DESCRIPTION

The novelty is that, instead of being evaluated against a human key annotation, system annotations are evaluated as source information for a QA task. The score measures how many temporal questions can be answered correctly given the annotation. In other words, it measures how useful participant annotations are for the QA system to understand the temporal information contained in the text and obtain the correct answers.
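As a minimal sketch of how annotations can act as a knowledge base for QA, assume hypothetical yes/no questions of the form “is X before Y?” and keep only BEFORE and AFTER TLINKs, simplified to (source id, relType, target id) triples. Because BEFORE is transitive, a question can be answered even when no single TLINK connects X and Y, while missing links leave it unanswered. The question format and function names are illustrative assumptions; this is not the task's official QA system.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, Set, Tuple

# Simplified TLINK: (source id, relType, target id). Hypothetical format.
TLink = Tuple[str, str, str]


def build_before_graph(tlinks: Iterable[TLink]) -> Dict[str, Set[str]]:
    """Keep only BEFORE/AFTER links, stored so that every edge means 'x BEFORE y'."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for src, rel, tgt in tlinks:
        if rel == "BEFORE":
            graph[src].add(tgt)
        elif rel == "AFTER":  # 'x AFTER y' is the same fact as 'y BEFORE x'
            graph[tgt].add(src)
    return graph


def _reachable(graph: Dict[str, Set[str]], start: str, goal: str) -> bool:
    """Breadth-first search for a chain of BEFORE edges from start to goal."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt == goal:
                return True
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def is_before(graph: Dict[str, Set[str]], x: str, y: str) -> str:
    """Answer 'is x before y?' as 'yes', 'no', or 'unknown'."""
    if _reachable(graph, x, y):
        return "yes"
    if _reachable(graph, y, x):  # y BEFORE x rules out x BEFORE y
        return "no"
    return "unknown"  # the annotation does not support an answer


if __name__ == "__main__":
    kb = build_before_graph([("e1", "BEFORE", "e2"), ("e3", "AFTER", "e2")])
    print(is_before(kb, "e1", "e3"))  # yes: e1 BEFORE e2 BEFORE e3
    print(is_before(kb, "e3", "e1"))  # no
    print(is_before(kb, "e1", "e4"))  # unknown
```

The same annotation thus earns credit for every question its links support, which is what makes the score sensitive to relations that matter for answering questions rather than to every relation equally.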

Questions and answers will be manually annotated by human annotators after reading the source text documents.
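The system's answers are then compared with the human answer key. The sketch below assumes the common definitions, in line with the notice at the top of this page that recall was recalculated to match the common definition, although the official formulas are not given here: precision is correct answers over answered questions, recall is correct answers over all questions in the key, and coverage is answered questions over all questions. The function name `qa_scores` and the dictionary layout are hypothetical.

```python
from typing import Dict, Optional


def qa_scores(system: Dict[str, Optional[str]], key: Dict[str, str]) -> Dict[str, float]:
    """Score system answers against a human answer key.

    `system` maps question id -> answer; a missing entry or None means the
    system could not answer. Assumed definitions (not the official scorer):
      precision = correct / answered
      recall    = correct / questions in the key
      coverage  = answered / questions in the key
    """
    total = len(key)
    answered = [q for q in key if system.get(q) is not None]
    correct = sum(1 for q in answered if system[q] == key[q])
    precision = correct / len(answered) if answered else 0.0
    recall = correct / total if total else 0.0
    coverage = len(answered) / total if total else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "coverage": coverage, "f1": f1}
```

With four questions in the key and three answered, two of them correctly, this gives precision 2/3, recall 0.5, and coverage 0.75. Under this definition of recall, unanswered questions lower recall but not precision.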

REFERENCES

J. Pustejovsky, J. M. Castaño, R. Ingria, R. Sauri, R. J. Gaizauskas, A. Setzer, G. Katz, and D. R. Radev, “TimeML: Robust Specification of Event and Temporal Expressions in Text,” in New Directions in Question Answering, M. T. Maybury, Ed. AAAI Press, 2003, pp. 28–34.

N. UzZaman et al., “SemEval-2013 Task 1: TempEval-3,” in Proceedings of the International Workshop on Semantic Evaluations (SemEval 2013), 2013.

M. Verhagen, R. Sauri, T. Caselli, and J. Pustejovsky, “SemEval-2010 Task 13: TempEval-2,” in Proceedings of the International Workshop on Semantic Evaluations (SemEval 2010), 2010.

J. F. Allen, “Maintaining knowledge about temporal intervals,” Communications of the ACM, vol. 26, no. 11, pp. 832–843, 1983.

N. UzZaman, H. Llorens, and J. Allen, “Evaluating Temporal Information Understanding with Temporal Question Answering,” in Proceedings of the IEEE International Conference on Semantic Computing (ICSC), 2012.