Task 1: Paraphrase and Semantic Similarity in Twitter

TASK DESCRIPTION

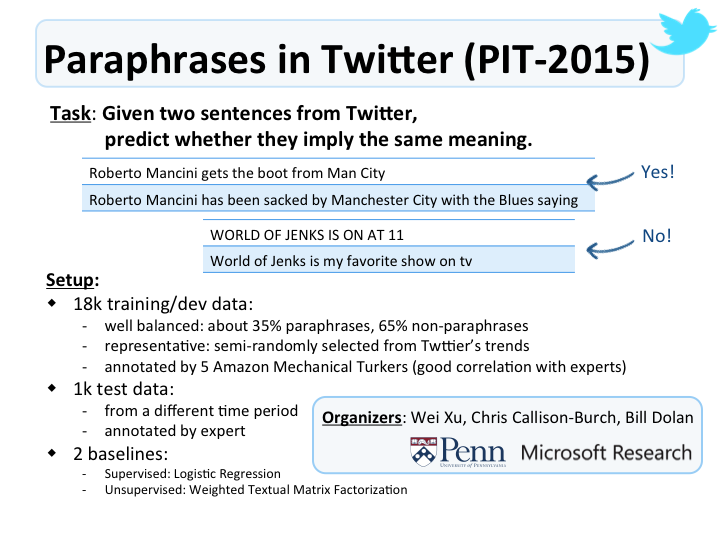

Given two sentences, the participants are asked to determine whether they express the same or very similar meaning and optionally a degree score between 0 and 1. Following the literature on paraphrase identification, we evaluate system performance primarily by the F-1 score and Accuracy against human judgements. We also provide additional evaluations by Pearson correlation and PINC (Chen and Dolan, 2011), which measures lexical dissimilarity between sentence pairs.

EVALUATION

- Updated readme with the evaluation plan. [Must look!]

- Sample test data shows the file format of test data. And its corresponding labels that can be used to try the evaluation script below.

- Sample system output shows the file format required for system output submission.

- Sample evaluation script that provides some basic evaluation metrics, F1 score for binary output and Pearson correlation for degreed output.

- A format checking script that checks system outputs before submission. Outputs that can not pass this check will be excluded from the official evaluation. [Must look!]

- Test data [NEW] is released on Dec 12th, 2014.

- Submission [NEW] Please fill this form to receive a unique submission link for your team in email. Each team can submit up to 2 runs by Dec 18th 11:59pm GMT.

DATA & BASELINE SYSTEMS

- Trial data contains 50 examples.

- Readme describes the training/dev data format and the scripts for baseline systems.

- Training & dev data is ready. Please contact us for the data. This Twitter Paraphrase Corpus was developed by Wei Xu et al. (TACL 2014) via crowdsourcing. It contains about 17,790 annotated sentence pairs, and comes with tokenization (Brendan O'Connor et al., 2010), part-of-speech (Derczynski et al., 2013) and named entity tags (Alan Ritter et al., 2011).

- Baseline system #1 is a logistic regression model using simple lexical features, which is originally used by Dipanjan Das and Noah A. Smith (ACL 2009). It achieves a maximum F-score of 0.6 when trained on train.data and tested on dev.data.

SOME EXTERNAL TOOLS/RESOURCES

- Baseline system #2 is the Weighted Matrix Factorization model developed by Weiwei Guo and Mona Diab (ACL 2012).

- PPDB a large-scale paraphrase database developed by Juri Ganitkevitch, Benjamin Van Durme and Chris Callison-Burch (NAACL 2013).

- Twitter normalization lexicon developed by Bo Han and Timothy Baldwin (ACL 2011).

BACKGROUD

In this shared-task, we will provide a first common ground for development and comparison of Paraphrase Identification systems for the Twitter data. Given a pair of sentences from Twitter trends, participants will be asked to produce a binary yes/no judgement and optionally a graded score to measure their semantic equivalence. These two related but different problems have been studied extensively in the past, because they are critical to many NLP applications, such as summarization, sentiment analysis, textual entailment and information extraction etc. The purpose of running this task is to promote this line of research in the new challenging setting of social media data, and help advance other NLP techniques for noisy user-generated text in the long run.

The ability to identify paraphrase, i.e. alternative expressions of the same meaning, and the degree of semantic similarity has proven useful for a wide variety of natural language processing applications (Madnani and Dorr, 2010). Previously, many researchers have investigated ways of automatically detecting paraphrases. The ACL Wiki gives an excellent summary of the state-of-the-art paraphrase identification techniques. These can be categorized into supervised (Qiu et al., 2006; Wan et al., 2006; Das and Smith, 2009; Socher et al., 2011; Blacoe and Lapata, 2012; Madnani et al., 2012; Ji and Eisenstein, 2013) and unsupervised (Mihalcea et al., 2006; Rus et al., 2008; Fernando and Stevenson, 2008; Islam and Inkpen, 2009; Hassan and Mihalcea, 2011) methods. Most of previous work are on grammatical text. A few recent studies have highlighted the potential and importance of developing paraphrase (Zanzotto et al., 2011; Xu et al., 2013) and semantic similarity techniques (Guo and Diab, 2012) specifically for tweets. They also indicated that the very informal language, especially the high degree of lexical variation, used in social media has posed serious challenges.

REFERENCES

- Bannard, C. and Callison-Burch, C. (ACL 2005). Paraphrasing with Bilingual Parallel Corpora.

- Chen, D. L. and Dolan, W. B. (ACL 2011). Collecting Highly Parallel Data for Paraphrase Evaluation.

- Derczynski, L., Ritter, A., Clark, S., and Bontcheva, K. (RANLP 2013). Twitter Part-of-Speech Tagging for All: Overcoming Sparse and Noisy Data.

- Ganitkevitch, J., Van Durme, B., and Callison-Burch, C. (NAACL 2013). PPDB: The Paraphrase Database.

- Guo, W. and Diab, M. (ACL 2012). Modeling Sentences in the Latent Space.

- Madnani, N. and Dorr, B. J. (Computational Linguistics 2010). Generating Phrasal and Sentential Paraphrases: A Survey of Data-driven Methods.

- O’Connor, B., Krieger, M., and Ahn, D. (ICWSM 2010). Tweetmotif: Exploratory Search and Topic Summarization for Twitter.

- Ritter, A., Clark, S., Mausam, Etzioni, O. (EMNLP 2011). Named Entity Recognition in Tweets: An Experimental Study.

- Wang, L., Dyer, C., Black, A. W., and Trancoso, I. (EMNLP 2013). Paraphrasing 4 Microblog Normalization.

- Xu, W., Ritter, A., Callison-Burch, C., Dolan, W. B. and Ji, Y. (TACL 2014). Extracting Lexically Divergent Paraphrases from Twitter.

- Xu, W., Ritter, A., and Grishman, R. (ACL Workshop BUCC 2013). Gathering and Generating Paraphrases from Twitter with Application to Normalization.

- Xu, W. (PhD Thesis 2014). Data-Drive Approaches for Paraphrasing Across Language Variations. Department of Computer Science, New York University.

- Zanzotto, F. M., Pennacchiotti, M., and Tsioutsiouliklis, K. (EMNLP 2011). Linguistic Redundancy in Twitter.