Task Description: Sentiment Analysis in Twitter

Results:

- The testset can be found here (combines five testsets: Twitter-2013, SMS-2013, Twitter-2014, Twitter-sarcasm-2014 and LiveJournal-2014).

- The official results can be found here.

- Final test scorer + gold labels for the final submissions are here.

- System submissions are here: subtask A and subtask B.

- The task description paper can be found in the ACL Anthology in PDF and BibTex.

Summary:

This is a rerun of SemEval-2013 task 2 (Nakov et al, 2013) with new test data from (i) Twitter and (ii) another (surprise) genre.

Abstract:

In the past decade, new forms of communication, such as microblogging and text messaging have emerged and become ubiquitous. While there is no limit to the range of information conveyed by tweets and texts, often these short messages are used to share opinions and sentiments that people have about what is going on in the world around them. Thus, the purpose of this task rerun is to promote research that will lead to better understanding of how sentiment is conveyed in tweets and texts. There will be two subtasks: an expression-level task and a message-level subtask; participants may choose to participate in either or both subtasks.

- Subtask A: Contextual Polarity Disambiguation

Given a message containing a marked instance of a word or phrase, determine whether that instance is positive, negative or neutral in that context.

- Subtask B: Message Polarity Classification

Given a message, classify whether the message is of positive, negative, or neutral sentiment. For messages conveying both a positive and negative sentiment, whichever is the stronger sentiment should be chosen.

I. Introduction and Motivation

In the past decade, new forms of communication, such as microblogging and text messaging have emerged and become ubiquitous. While there is no limit to the range of information conveyed by tweets and texts, often these short messages are used to share opinions and sentiments that people have about what is going on in the world around them.

Working with these informal text genres presents challenges for natural language processing beyond those typically encountered when working with more traditional text genres, such as newswire data. Tweets and texts are short: a sentence or a headline rather than a document. The language used is very informal, with creative spelling and punctuation, misspellings, slang, new words, URLs, and genre-specific terminology and abbreviations, such as, RT for “re-tweet” and #hashtags, which are a type of tagging for Twitter messages. How to handle such challenges so as to automatically mine and understand the opinions and sentiments that people are communicating has only very recently been the subject of research (Jansen et al., 2009; Barbosa and Feng, 2010; Bifet and Frank, 2010; Davidov et al., 2010; O’Connor et al., 2010; Pak and Paroubek, 2010; Tumasjen et al., 2010; Kouloumpis et al., 2011).

Another aspect of social media data such as Twitter messages is that it includes rich structured information about the individuals involved in the communication. For example, Twitter maintains information of who follows whom and re-tweets and tags inside of tweets provide discourse information. Modeling such structured information is important because: (i) it can lead to more accurate tools for extracting semantic information, and (ii) it provides means for empirically studying properties of social interactions (e.g., we can study properties of persuasive language or what properties are associated with influential users).

SemEval-2013 Task 2 has created a freely available, annotated corpus that can be used as a common testbed to promote research that will lead to a better understanding of how sentiment is conveyed in tweets and texts. The few corpora with detailed opinion and sentiment annotation that have been made freely available before that, e.g., the MPQA corpus (Wiebe et al., 2005) of newswire data, have proved to be valuable resources for learning about the language of sentiment. While a few Twitter sentiment datasets have been created, they were either small and proprietary, such as the i-sieve corpus (Kouloumpis et al., 2011), or relied on noisy labels obtained from emoticons or hashtags. Furthermore, no Twitter or text corpus with expression-level sentiment annotations has been made available before thе one developed for SemEval-2013 Task 2.

SemEval-2013 Task 2 attracted a very large number of participants, who tried a variety of techniques. Thus, we have decided to do a rerun of the task using the same training and development data, but with new test data from (i) Twitter and (ii) another (surprise) domain. We encourage you to read our publication of the task for further information regarding the 2013 evaluation (http://www.aclweb.org/anthology/S/S13/S13-2052.pdf)

II. Task Description

The task has two subtasks: an expression-level subtask and a message-level subtask. Participants may choose to participate in either or both subtasks.

- Subtask A: Contextual Polarity Disambiguation: Given a message containing a marked instance of a word or a phrase, determine whether that instance is positive, negative or neutral in that context. The boundaries for the marked instance will be provided, i.e., this is a classification problem, NOT an entity recognition problem.

- Subtask B: Message Polarity Classification: Given a message, decide whether the message is of positive, negative, or neutral sentiment. For messages conveying both a positive and negative sentiment, whichever is the stronger sentiment should be chosen.

III. Data

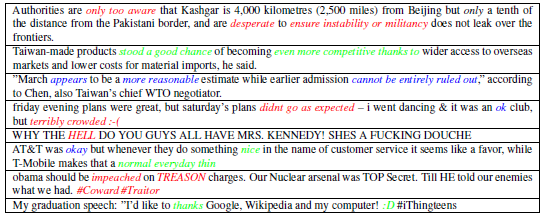

As part of SemEval-2013 task 2 (Nakov et al, 2013), we gathered a corpus of messages on a range of topics, including a mixture of entities (e.g., Gadafi, Steve Jobs), products (e.g., Kindle, Android phone), and events (e.g., Japan earthquake, NHL playoffs). Keywords and Twitter hashtags were used to identify messages relevant to the selected topic. All Twitter datasets were sampled and annotated in the same way. Here is an example (positive phrases are in green, negative phrases are in red, and neutral phrases are in blue):

The following datasets are available for training and development:

- training: 9,728 Twitter messages

- development: 1,654 Twitter messages (can be used for training as well)

- development-test #1: 3,814 Twitter messages (CANNOT be used for training)

- development-test #2: 2,094 SMS messages (CANNOT be used for training)

Participants should note that there will be two test datasets, one composed of Twitter messages and another one from a surprise domain for which they would not be receiving explicit training data. The purpose of having a separate test set of a different genre is to see how well systems trained on Twitter data will generalize to other types of text.

IV. Evaluation

Each participating team will initially have access to the training and development datasets only. Later, the unlabelled test data will be released. After SemEval-2014, the labels for the test data will be released as well.

The metric for evaluating the participants’ systems will be average F-measure (averaged F-positive and F-negative, and ignoring F-neutral; note that this does not make the task binary!), as well as F-measure for each class (positive, negative, neutral), which can be illuminating when comparing the performance of different systems. We will ask the participants to submit their predictions, and the organizers will calculate the results for each participant. For each subtask, systems will be ranked based on their average F-measure. Separate rankings for each test dataset will be produced. A scorer is provided with the trraining datasets.

For each task and for each test dataset, each team may submit two runs: (1) Constrained - using the provided training data only; other resources, such as lexicons are allowed; however, it is not allowed to use additional tweets/SMS messages or additional sentences with sentiment annotations; and (2) Unconstrained - using additional data for training, e.g., additional tweets/SMS messages or additional sentences annotated for sentiment.

Teams will be asked to report what resources they have used for each run submitted.

V. Schedule

- Trial data ready October 30, 2013

- Training data ready December 15, 2013

- Test data released March 24, 2014

- Evaluation end March 30, 2014

- Paper submission due April 30, 2014

- Paper reviews due May 30, 2014

- Camera ready due June 30, 2014

- SemEval workshop August 23-24, 2014 (collocated with COLING'2014)

VI. Organizers

Sara Rosenthal, Columbia University

Alan Ritter, The Ohio State University

Veselin Stoyanov, Facebook

Preslav Nakov, Qatar Computing Research Institute

VII. References

Preslav Nakov, Zornitsa Kozareva, Alan Ritter, Sara Rosenthal, Veselin Stoyanov, and Theresa Wilson. 2013. In Semeval-2013 Task 2: Sentiment Analysis in Twitter. Proceedings of the 7th International Workshop on Semantic Evaluation. Association for Computational Linguistics

Barbosa, L. and Feng, J. 2010. Robust sentiment detection on twitter from biased and noisy data. Proceedings of Coling.

Bifet, A. and Frank, E. 2010. Sentiment knowledge discovery in twitter streaming data. Proceedings of 14th International Conference on Discovery Science.

Davidov, D., Tsur, O., and Rappoport, A. 2010. Enhanced sentiment learning using twitter hashtags and smileys. Proceedings of Coling.

Jansen, B.J., Zhang, M., Sobel, K., and Chowdury, A. 2009. Twitter power: Tweets as electronic word of mouth. Journal of the American Society for Information Science and Technology 60(11):2169-2188.

Kouloumpis, E., Wilson, T., and Moore, J. 2011. Twitter Sentiment Analysis: The Good the Bad and the OMG! Proceedings of ICWSM.

O’Connor, B., Balasubramanyan, R., Routledge, B., and Smith, N. 2010. From tweets to polls: Linking text sentiment to public opinion time series. Proceedings of ICWSM.

Pak, A. and Paroubek, P. 2010. Twitter as a corpus for sentiment analysis and opinion mining. Proceedings of LREC.

Tumasjan, A., Sprenger, T.O., Sandner, P., and Welpe, I. 2010. Predicting elections with twitter: What 140 characters reveal about political sentiment. Proceedings of ICWSM.

Janyce Wiebe, Theresa Wilson and Claire Cardie (2005). Annotating expressions of opinions and emotions in language. Language Resources and Evaluation, volume 39, issue 2-3, pp. 165-210.

VIII. Contact Person

Sara Rosenthal